Interest in Docker has been growing year after year, and this is no surprise. Docker is a great tool for orchestrating development environments for software projects. It enables us to create environment-agnostic packages that can be used across environments (development, QA, staging) and deployed easily to production.

Using Docker allows us to have the same configuration on developer computers and in production. You won’t hear “works on my machine” anymore.

Eliminating the issue of having different environments on different machines makes it easier for developers to work on the app throughout its life cycle. The mantra is: “build once, run anywhere.”

And as if this wasn’t enough already, enter Docker orchestration! There are many container orchestration tools out there, such as Kubernetes, Rancher, and Cloud Foundry’s Diego. The big cloud providers have also seized the opportunity to offer orchestration as a service, for example Amazon ECS, Google Container Engine, and Azure Container Service. They are all great solutions, and picking one over another is mostly a matter of your preferred cloud provider.

In our case, that’s AWS. As our needs matured, Amazon kept releasing new AWS services that helped us scale our business operations.

Elastic Container Service

As mentioned earlier, Amazon ECS is Docker orchestration on AWS, and together with ECR it is a powerful tool for running software applications with ease, whether they are monolithic apps or apps based on microservices. Here are some of the basic concepts you should get familiar with.

ECR

Amazon Elastic Container Registry (ECR) is a private Docker registry. It is an inexpensive solution to host all of your internal Docker images.

By creating project-specific Docker images and pushing them to ECR, you let developers pull and launch the project after authenticating against ECR. They don’t need to set up complex services or work through an extensive set of developer documentation.

With Docker images, developers can run the project in minutes and focus on the task at hand instead of on configuration: a serious developer productivity boost!

There are 3 prerequisites here:

- Account that can pull from ECR

- AWS CLI installed

- Docker installed

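Before running Docker commands against ECR, users need to log in by running `eval $(aws ecr get-login)`. That command is only available in AWS CLI version 1. With version 2, log in with `aws ecr get-login-password --region <region> | docker login --username AWS --password-stdin <aws_account_id>.dkr.ecr.<region>.amazonaws.com` instead.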
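The login command is built on ECR’s GetAuthorizationToken API. That API returns a base64-encoded token that decodes to `AWS:<password>`, and that pair is what gets passed to `docker login`. The Python sketch below only decodes such a token. It uses a made-up sample so it runs offline. Fetching a real token (for example with boto3’s `get_authorization_token`) is deliberately left out, and `decode_ecr_token` is a hypothetical helper name.

```python
import base64


def decode_ecr_token(authorization_token: str) -> tuple[str, str]:
    """Split a base64-encoded ECR authorization token into (username, password)."""
    decoded = base64.b64decode(authorization_token).decode("utf-8")
    # partition splits on the first ":" only, so a ":" inside the password survives
    username, sep, password = decoded.partition(":")
    if not sep:
        raise ValueError("decoded token is not in user:password form")
    return username, password


if __name__ == "__main__":
    # Made-up sample token; a real one comes from the GetAuthorizationToken API
    sample = base64.b64encode(b"AWS:not-a-real-password").decode("ascii")
    username, _password = decode_ecr_token(sample)
    print(username)  # prints "AWS"
```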

ECS

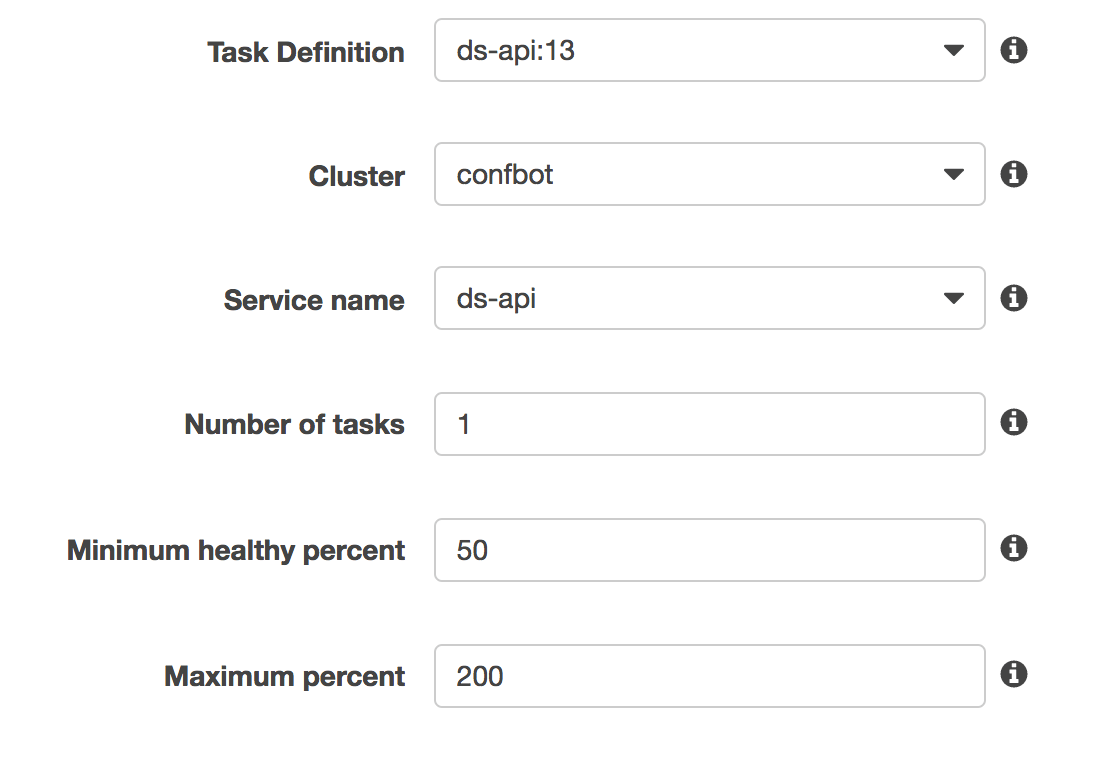

ECS revolves around 3 concepts: Tasks, Services and Clusters.

Tasks are described by task definitions, which is where you define what job needs to be done. A task definition specifies the Docker images to run, the resources they need, networking, and more. You can read about all the available task definition parameters in the official AWS developer guide. Task definitions can be created from a JSON file or in the UI.
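The Python sketch below makes the JSON shape concrete. It builds a single-container task definition and prints it as JSON. The helper name and the placeholder image URI are assumptions for illustration. The field names (`family`, `requiresCompatibilities`, `containerDefinitions`, `portMappings`) are standard task definition parameters.

```python
import json


def minimal_task_definition(family: str, container_name: str, image: str,
                            container_port: int, memory_mib: int = 128) -> dict:
    """Build a minimal EC2-launch-type task definition with a single container."""
    return {
        "family": family,
        "requiresCompatibilities": ["EC2"],
        "containerDefinitions": [
            {
                "name": container_name,
                "image": image,
                "essential": True,
                # Hard limit in MiB: the container is killed if it exceeds this
                "memory": memory_mib,
                # No hostPort: with the default bridge network mode,
                # ECS assigns an ephemeral host port
                "portMappings": [
                    {"containerPort": container_port, "protocol": "tcp"}
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder image URI; replace it with your own ECR repository URI
    task_def = minimal_task_definition(
        "myapp", "app",
        "<aws_account_id>.dkr.ecr.<region>.amazonaws.com/myapp:latest", 3000)
    # Save the output as e.g. taskdef.json (hypothetical name) and register it with:
    #   aws ecs register-task-definition --cli-input-json file://taskdef.json
    print(json.dumps(task_def, indent=2))
```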

Services are a way to run long-running, stateless tasks. This is what you will create to run your apps, and it is one way to run tasks. The other way is to run tasks on demand, either manually or on a cron-like schedule (hint: you can use CloudWatch Events to achieve this).
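CloudWatch Events schedules use either a `rate(...)` expression or a six-field `cron(...)` expression (minutes, hours, day-of-month, month, day-of-week, year). One of the two day fields must be `?`. The Python sketch below is a rough, hypothetical pre-flight check for those two rules only. It does not validate individual field values, so AWS remains the final judge of whether an expression is accepted.

```python
def check_schedule_expression(expr: str) -> None:
    """Raise ValueError if a CloudWatch Events schedule expression is obviously malformed.

    rate(...) expressions are accepted without further checks. For cron(...) only two
    rules are checked: there are six fields, and at least one of day-of-month and
    day-of-week is "?". Individual field values are not validated.
    """
    if expr.startswith("rate(") and expr.endswith(")"):
        return
    if not (expr.startswith("cron(") and expr.endswith(")")):
        raise ValueError("expected cron(...) or rate(...)")
    fields = expr[len("cron("):-1].split()
    if len(fields) != 6:
        raise ValueError(
            "cron needs 6 fields: minutes hours day-of-month month day-of-week year")
    day_of_month, day_of_week = fields[2], fields[4]
    if "?" not in (day_of_month, day_of_week):
        raise ValueError("use ? in either day-of-month or day-of-week")


if __name__ == "__main__":
    # Every day at 12:00 UTC (CloudWatch Events schedules run in UTC)
    check_schedule_expression("cron(0 12 * * ? *)")
```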

Clusters group tasks and services and provide the pool of EC2 instances that is used to run tasks.

For an EC2 instance to be registered in a cluster, it must run the Amazon ECS container agent. Amazon provides ECS-optimized AMIs with the agent preinstalled, and you can use them to run your containers. For a staging environment, a single EC2 instance may be enough. For production, it is recommended that you set up an Auto Scaling group that scales up and down based on load and provides high availability.
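On the ECS-optimized AMIs, the container agent reads the cluster to join from `/etc/ecs/ecs.config`, using the `ECS_CLUSTER` variable. Without that variable, the instance registers with the cluster named `default`. The Python sketch below builds an EC2 user-data script that sets the variable. It assumes you pass user data when launching instances or when configuring the Auto Scaling group. The helper name and cluster name are hypothetical.

```python
import re

# Cluster names allow up to 255 letters, numbers, hyphens, and underscores
_CLUSTER_NAME = re.compile(r"[A-Za-z0-9_-]{1,255}")


def ecs_user_data(cluster_name: str) -> str:
    """Return an EC2 user-data script that makes the ECS agent join a cluster."""
    # fullmatch rejects names with spaces, newlines, or other shell-relevant characters
    if not _CLUSTER_NAME.fullmatch(cluster_name):
        raise ValueError(f"invalid ECS cluster name: {cluster_name!r}")
    return (
        "#!/bin/bash\n"
        # The agent picks up ECS_CLUSTER from this file
        f"echo ECS_CLUSTER={cluster_name} >> /etc/ecs/ecs.config\n"
    )


if __name__ == "__main__":
    print(ecs_user_data("myapp-staging"), end="")
```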

Configuring all of this would be too much for one blog post. If you’re interested in this topic, please leave us a comment and we will create more AWS-specific posts that will go into more detail.

Deploying an app to AWS with nginx and SSL

Let’s say you have followed the Express.js Hello World example guide. You now have a node_modules/ directory and the app.js and package.json files. To start a container from it, we first have to write an image definition. Create a file named Dockerfile with the following contents:

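```dockerfile
# Official Node.js base image; pick the tag that matches your Node version
FROM node:lts

# WORKDIR creates /usr/src/app if it does not exist
WORKDIR /usr/src/app

# Copy the manifest first so the npm install layer is cached between builds
COPY package.json .
RUN npm install

COPY app.js .

EXPOSE 3000
CMD ["node", "app.js"]
```

Then, to build the image, run `docker build -t myapp .` (the trailing dot sets the build context to the current directory).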

After it is built, you can try it out with `docker run -it --rm -p 8000:3000 myapp`.

Open http://localhost:8000 in a browser to see your app in action. The container exposes port 3000, and the `-p 8000:3000` flag maps it to port 8000 on the host machine.
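If you prefer a scripted check to a browser, a small Python sketch can probe the mapped port. The `is_up` helper is hypothetical and uses only the standard library. Any connection error or non-2xx response counts as "not up".

```python
import http.client
import urllib.request


def is_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET to url answers with a 2xx status within timeout seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException):
        # URLError and HTTPError are OSError subclasses, so refused connections,
        # timeouts, DNS failures, and 4xx/5xx statuses all end up here
        return False


if __name__ == "__main__":
    # Host port 8000 is the one mapped by docker run -p 8000:3000
    print(is_up("http://localhost:8000"))
```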

Now it’s time to push it to AWS ECR. Go to the AWS Console, navigate to Elastic Container Service, and then Repositories. Create a repository named myapp, and follow the instructions to push your image.
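Apart from building the image, the push instructions come down to three steps: log in, tag the local image with the repository URI, and push it. ECR repository URIs have the form `<aws_account_id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>`. The Python sketch below builds the `docker tag` and `docker push` commands as argument lists, each of which could be handed to `subprocess.run`. The account ID is a placeholder and the helper names are hypothetical.

```python
import shlex


def ecr_image_uri(account_id: str, region: str, repository: str,
                  tag: str = "latest") -> str:
    """Return the URI of an image in a private ECR registry."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def push_commands(local_image: str, account_id: str, region: str,
                  repository: str, tag: str = "latest") -> list[list[str]]:
    """Return the docker commands (argv lists) that tag and push a local image.

    Assumes you have already logged in to the registry with docker login.
    """
    remote = ecr_image_uri(account_id, region, repository, tag)
    return [
        ["docker", "tag", local_image, remote],
        ["docker", "push", remote],
    ]


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID
    for argv in push_commands("myapp:latest", "123456789012", "us-east-1", "myapp"):
        print(shlex.join(argv))
```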

After it’s done, we need to create the task definition for our service. Go to Elastic Container Service, and then Task Definitions. Click Create new Task Definition, select EC2 launch type compatibility, name it myapp, and then proceed to create four volumes and three containers.

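Create them by pasting and reviewing the following JSON. Replace the email address, the domain, and the app container’s image URI with your own values: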

```json
{
  "family": "myapp",
  "requiresCompatibilities": ["EC2"],
  "containerDefinitions": [
    {
      "name": "proxy",
      "image": "jwilder/nginx-proxy",
      "essential": true,
      "cpu": 0,
      "memory": 64,
      "memoryReservation": 32,
      "portMappings": [
        { "hostPort": 80, "containerPort": 80, "protocol": "tcp" },
        { "hostPort": 443, "containerPort": 443, "protocol": "tcp" }
      ],
      "environment": [
        { "name": "VIRTUAL_PORT", "value": "443" }
      ],
      "mountPoints": [
        { "sourceVolume": "certs", "containerPath": "/etc/nginx/certs", "readOnly": true },
        { "sourceVolume": "vhosts", "containerPath": "/etc/nginx/vhost.d" },
        { "sourceVolume": "www", "containerPath": "/usr/share/nginx/html" },
        { "sourceVolume": "docker", "containerPath": "/tmp/docker.sock", "readOnly": true }
      ],
      "dockerLabels": {
        "com.github.jrcs.letsencrypt_nginx_proxy_companion.nginx_proxy": ""
      }
    },
    {
      "name": "letsencrypt",
      "image": "jrcs/letsencrypt-nginx-proxy-companion",
      "essential": true,
      "cpu": 0,
      "memory": 64,
      "memoryReservation": 32,
      "mountPoints": [
        { "sourceVolume": "certs", "containerPath": "/etc/nginx/certs" },
        { "sourceVolume": "docker", "containerPath": "/var/run/docker.sock", "readOnly": true }
      ],
      "volumesFrom": [
        { "sourceContainer": "proxy" }
      ]
    },
    {
      "name": "app",
      "image": "977016000971.dkr.ecr.us-east-1.amazonaws.com/myapp:latest",
      "essential": true,
      "cpu": 0,
      "memory": 64,
      "memoryReservation": 32,
      "environment": [
        { "name": "LETSENCRYPT_EMAIL", "value": "you@yourdomain.com" },
        { "name": "LETSENCRYPT_HOST", "value": "yourdomain.com" },
        { "name": "VIRTUAL_HOST", "value": "yourdomain.com" }
      ]
    }
  ],
  "volumes": [
    { "name": "certs", "host": { "sourcePath": "/home/outdoor/certs" } },
    { "name": "vhosts", "host": { "sourcePath": "/home/outdoor/vhosts" } },
    { "name": "www", "host": { "sourcePath": "/home/outdoor/www" } },
    { "name": "docker", "host": { "sourcePath": "/var/run/docker.sock" } }
  ]
}
```

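Before pasting a definition like this, it can help to check its cross-references. Every `sourceVolume` should match an entry in `volumes`, and every `volumesFrom` entry should name a container in the same task. The Python sketch below is a hypothetical helper that performs those checks. It also reports volumes that are defined but never mounted. It does not replace ECS’s own validation.

```python
import json
import sys


def check_task_definition(task_def: dict) -> list[str]:
    """Report broken volume and container references in an ECS task definition."""
    problems = []
    containers = task_def.get("containerDefinitions") or []
    volume_names = {v["name"] for v in task_def.get("volumes") or []}
    container_names = {c["name"] for c in containers}
    mounted = set()

    for c in containers:
        # mountPoints and volumesFrom may be missing or null in exported JSON
        for mp in c.get("mountPoints") or []:
            mounted.add(mp["sourceVolume"])
            if mp["sourceVolume"] not in volume_names:
                problems.append(f"{c['name']}: mounts undefined volume {mp['sourceVolume']!r}")
        for vf in c.get("volumesFrom") or []:
            if vf["sourceContainer"] not in container_names:
                problems.append(f"{c['name']}: volumesFrom unknown container {vf['sourceContainer']!r}")

    for name in sorted(volume_names - mounted):
        problems.append(f"volume {name!r} is defined but never mounted")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical file names): python check_taskdef.py taskdef.json
    with open(sys.argv[1]) as f:
        for problem in check_task_definition(json.load(f)):
            print(problem)
```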
After creating the task definition, create a cluster with at least one EC2 instance and point your domain’s DNS record at that instance. Then create a service based on this task definition. Your service should now be up and running!
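The same console steps can be scripted with the AWS CLI. The Python sketch below builds the `aws ecs` commands as argument lists rather than running them. The cluster, service, and file names are hypothetical. The sketch assumes the cluster gets at least one EC2 instance running the container agent, because an empty cluster has nowhere to place EC2-launch-type tasks.

```python
import shlex


def deploy_commands(cluster: str, service: str, family: str,
                    task_def_file: str, desired_count: int = 1) -> list[list[str]]:
    """Return aws CLI commands (argv lists) that create a cluster and an ECS service."""
    return [
        ["aws", "ecs", "create-cluster", "--cluster-name", cluster],
        # Registers a new revision of the task definition family from a JSON file
        ["aws", "ecs", "register-task-definition",
         "--cli-input-json", f"file://{task_def_file}"],
        # Without an explicit revision, the latest ACTIVE revision of the family is used
        ["aws", "ecs", "create-service", "--cluster", cluster,
         "--service-name", service, "--task-definition", family,
         "--desired-count", str(desired_count), "--launch-type", "EC2"],
    ]


if __name__ == "__main__":
    for argv in deploy_commands("myapp-cluster", "myapp", "myapp", "taskdef.json"):
        print(shlex.join(argv))
```

The proxy container binds host ports 80 and 443, so each EC2 instance can run at most one copy of this task. For that reason the sketch defaults to a desired count of 1.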