From autonomous vehicles to remote patient monitoring, the Internet of Things (IoT) is transforming entire industries. As connected devices multiply into the tens of billions, the question isn’t just how we collect data but where we process it.

For technology leaders, the decision between edge computing and cloud computing isn’t binary. It’s a strategic calculation that determines how you scale, what you spend, and how fast your systems respond in mission-critical moments.

Let’s break down the trade-offs, practical use cases, and how hybrid architectures offer the best of both worlds.

Cloud Computing: Centralized Power, Unmatched Scale

The cloud is the backbone of modern computing. For IoT, it offers:

- Scalability: Managed platforms such as AWS IoT Core and Azure IoT Hub can handle massive data volumes across globally distributed devices. (Google Cloud IoT Core, once a peer of these services, was retired in August 2023.)

- Centralized Data Management: Aggregating and normalizing data from thousands of endpoints becomes manageable through cloud-native services.

- AI/ML Tooling: The cloud excels at training complex models on vast historical datasets, which are impractical to store or process on resource-constrained edge hardware.

- Cost Flexibility: With pay-as-you-go pricing, teams can avoid large capital expenditures and scale resources as needed.

- Global Accessibility: Teams can manage IoT fleets, run analytics, and deploy updates from anywhere.

Use Case: In a city-wide smart traffic system, sensor data from intersections is pushed to the cloud for congestion analysis, route optimization, and long-term planning models. The cloud aggregates this across districts, enabling centralized control dashboards and urban mobility simulations.

But cloud-only architectures hit limitations when real-time action is required.

Cloud Limitations:

- Latency: A round trip to the cloud introduces delays—unacceptable for real-time systems like industrial safety protocols or autonomous navigation.

- Bandwidth Costs: Pushing all raw data upstream, especially via cellular or satellite links, is expensive and inefficient.

- Reliability: Cloud systems depend on constant connectivity. In environments with spotty coverage (e.g., mines, ships, farms), operations can stall.

- Privacy & Compliance: Sending sensitive raw data to a public cloud raises regulatory red flags, especially under GDPR, HIPAA, or CCPA.

- Power Consumption: Continuous data transmission drains batteries, shortening the lifespan of remote or embedded devices.
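
To get a feel for the bandwidth point, here is a back-of-the-envelope sketch of how much data a fleet pushes upstream per day. The fleet size, sample rate, and payload sizes are hypothetical numbers chosen for illustration, not measurements from a real deployment.

```python
def daily_upload_bytes(devices: int, samples_per_second: float, bytes_per_sample: int) -> float:
    """Bytes uploaded per day if every sample is sent upstream."""
    seconds_per_day = 24 * 60 * 60
    return devices * samples_per_second * bytes_per_sample * seconds_per_day


# Hypothetical fleet: 1,000 sensors, 100 samples/s, 16 bytes per sample.
raw = daily_upload_bytes(devices=1_000, samples_per_second=100, bytes_per_sample=16)

# Same fleet sending one 64-byte summary per minute instead of raw samples.
summarized = daily_upload_bytes(devices=1_000, samples_per_second=1 / 60, bytes_per_sample=64)

print(f"raw:        {raw / 1e9:.1f} GB/day")
print(f"summarized: {summarized / 1e9:.3f} GB/day")
```

Under these assumptions the raw stream is roughly three orders of magnitude larger than the summarized one, which is the gap that matters on metered cellular or satellite links.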

Edge Computing: Intelligence Where It Matters

Edge computing moves data processing closer to the source—on the device itself or nearby nodes (like edge gateways or local servers).

Its core strengths include:

- Ultra-Low Latency: No cloud round-trips. Perfect for robotics, autonomous vehicles, and machine control.

- Reduced Bandwidth Load: Devices filter or summarize data locally, sending only actionable insights to the cloud.

- Offline Operation: Edge nodes continue functioning without an internet connection.

- Enhanced Data Security: Sensitive data can be anonymized or processed locally to reduce risk exposure.

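- Power Efficiency: Transmitting less data usually means lower energy use, because the radio is often one of the most power-hungry parts of a sensor node. This is a critical benefit for battery-powered or solar-powered sensors, provided the local processing itself stays lightweight.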

Use Case: In an oil rig monitoring system, vibration sensors use edge inference to detect anomalies in real-time. The device flags only outliers and critical thresholds to the cloud, dramatically reducing bandwidth and enabling proactive maintenance even with intermittent connectivity.
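
A minimal sketch of that kind of edge-side filtering might look like the following. It uses a rolling z-score plus a fixed critical threshold; both the technique and the numbers (`window`, `z_threshold`, `hard_limit`) are assumptions for illustration, and a real vibration monitor would likely use a domain-specific model.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeAnomalyFilter:
    """Keeps a rolling window of readings and flags only statistical outliers."""

    def __init__(self, window: int = 50, z_threshold: float = 3.0, hard_limit: float = 10.0):
        self.readings = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.hard_limit = hard_limit  # hypothetical critical vibration level

    def should_report(self, value: float) -> bool:
        """Return True if the reading is worth sending to the cloud."""
        report = abs(value) >= self.hard_limit
        if len(self.readings) >= 10:  # need some history before judging outliers
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                report = True
        self.readings.append(value)
        return report


# Hypothetical readings: steady vibration, one spike, one critical value.
stream = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0, 1.02, 6.0, 1.0, 12.0]
edge_filter = EdgeAnomalyFilter()
flagged = [v for v in stream if edge_filter.should_report(v)]
print(f"sent {len(flagged)} of {len(stream)} readings upstream: {flagged}")
```

Only the spike and the critical reading leave the device; everything else stays local. One design caveat: a flagged outlier still enters the rolling window here, so a production filter might exclude it to keep the baseline clean.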

Edge Challenges:

- Limited Resources: Edge devices can’t match the compute or storage of centralized cloud servers.

- Device Management Complexity: Securing, updating, and orchestrating hundreds (or thousands) of distributed edge nodes requires robust DevOps pipelines.

- Hardware Maintenance: Edge nodes are physical assets, often deployed in hard-to-reach locations like offshore platforms or embedded in heavy machinery.

- Security at Scale: Each device becomes a potential attack surface. Zero-trust architecture and over-the-air (OTA) patching become essential.
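
As one small piece of the OTA story, a device should refuse any update it cannot authenticate. The sketch below uses an HMAC-SHA256 tag with a shared key purely to keep the example inside the Python standard library; production OTA pipelines more commonly use asymmetric signatures so that devices never hold a signing secret. The function names and the key are hypothetical.

```python
import hashlib
import hmac


def sign_image(image: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a firmware image (done by the update server)."""
    return hmac.new(key, image, hashlib.sha256).hexdigest()


def verify_image(image: bytes, tag: str, key: bytes) -> bool:
    """Check the tag on the device before applying an update."""
    expected = sign_image(image, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)


key = b"demo-key-not-for-production"   # hypothetical shared secret
firmware = b"\x7fFIRMWARE v1.2.3"      # stand-in for a real image
tag = sign_image(firmware, key)

print(verify_image(firmware, tag, key))            # untampered image
print(verify_image(firmware + b"\x00", tag, key))  # modified image
```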

The Hybrid Model: Best of Both Worlds

Most modern IoT architectures aren’t choosing between edge and cloud; they’re blending both.

This hybrid approach distributes computation intelligently across three layers:

- Device Layer: Sensor nodes capture data and may perform light filtering or local control actions.

- Edge Layer: Gateways or industrial PCs aggregate data, run real-time AI inferences, and ensure fail-safe operation if disconnected.

- Cloud Layer: Centralized storage, cross-site analytics, AI model training, and remote management tools live in the cloud.
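
The edge layer's fail-safe behavior is often implemented as store-and-forward: buffer locally while the uplink is down, then flush in order when it returns. Here is a minimal sketch of that pattern. The `send` callback is a hypothetical stand-in for whatever MQTT or HTTPS client the gateway actually uses, and the in-memory buffer is an assumption; a real gateway would usually persist it to disk.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffers messages while the uplink is down and flushes them in order later."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000):
        self.send = send                          # returns True on successful upload
        self.buffer = deque(maxlen=max_buffered)  # oldest entries drop when full

    def publish(self, message: dict) -> None:
        self.buffer.append(message)
        self.flush()

    def flush(self) -> None:
        # Send oldest first; stop at the first failure and retry on the next call.
        while self.buffer:
            if not self.send(self.buffer[0]):
                break
            self.buffer.popleft()


# Simulated uplink for demonstration purposes.
link = {"up": False}
delivered = []


def fake_send(message: dict) -> bool:
    if link["up"]:
        delivered.append(message)
    return link["up"]


queue = StoreAndForward(fake_send)
queue.publish({"id": 1})   # uplink down: buffered
queue.publish({"id": 2})   # uplink down: buffered
link["up"] = True
queue.publish({"id": 3})   # uplink back: all three flushed in order
print([m["id"] for m in delivered])
```

The bounded buffer is a deliberate trade-off: during a long outage the gateway drops the oldest data rather than running out of memory.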

Example: Smart Agriculture

- At the edge: Soil moisture sensors and weather stations decide when to trigger irrigation locally.

- In the cloud: Aggregated data from multiple farms informs crop yield forecasts and region-wide water management policies.
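
The local irrigation decision can be very simple. The sketch below assumes a moisture reading in percent and a boolean rain forecast, and uses two thresholds (hysteresis) so the pump does not flap on and off around a single set point. The threshold values and the function name are hypothetical.

```python
def irrigation_command(moisture_pct: float, rain_expected: bool, pump_on: bool,
                       start_below: float = 25.0, stop_above: float = 40.0) -> bool:
    """Return whether the pump should be on, using hysteresis to avoid rapid toggling."""
    if rain_expected:
        return False    # let forecast rain do the work
    if moisture_pct < start_below:
        return True     # soil too dry: start or keep irrigating
    if moisture_pct > stop_above:
        return False    # soil wet enough: stop
    return pump_on      # in between: keep the current state


print(irrigation_command(20.0, rain_expected=False, pump_on=False))  # dry soil
print(irrigation_command(30.0, rain_expected=False, pump_on=True))   # mid-range, pump running
print(irrigation_command(20.0, rain_expected=True, pump_on=True))    # dry, but rain is coming
```

Nothing in this loop needs the cloud, so irrigation keeps working through a connectivity outage; the cloud's role is to tune the thresholds over time from multi-farm data.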

Example: Connected Cars

- Edge: The vehicle’s onboard computer handles real-time braking and obstacle detection.

- Cloud: Fleet-wide data is used for predictive maintenance, map updates, and traffic analytics.

The hybrid model ensures both real-time responsiveness and strategic oversight, without overwhelming your budget or network.

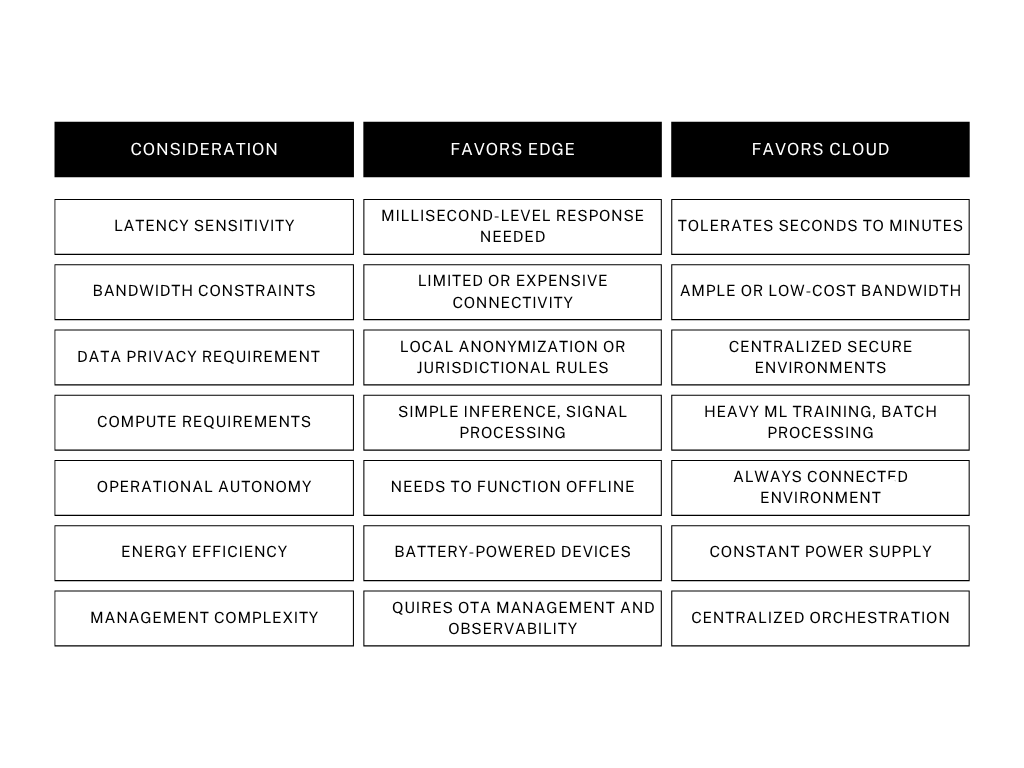

Key Decision Factors for Workload Distribution

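When architecting your system, balance the core variables discussed above: latency requirements, bandwidth and connectivity, data privacy and compliance, power budgets, and cost.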
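
One way to make these trade-offs explicit is to write them down as rules. The sketch below is illustrative only: the `Workload` fields, the 150 ms cloud round-trip default, and the decision rules are all assumptions, and a real placement decision would weigh cost and power as well.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    max_latency_ms: float      # how quickly a response is needed
    must_work_offline: bool    # must keep running without connectivity
    raw_data_sensitive: bool   # raw data should not leave the site
    needs_fleet_data: bool     # needs data from many devices or sites


def place(w: Workload, cloud_round_trip_ms: float = 150.0) -> str:
    """Suggest 'edge', 'cloud', or 'hybrid' for a workload (illustrative rules only)."""
    needs_edge = (w.max_latency_ms < cloud_round_trip_ms
                  or w.must_work_offline
                  or w.raw_data_sensitive)
    if needs_edge and w.needs_fleet_data:
        return "hybrid"
    return "edge" if needs_edge else "cloud"


workloads = [
    Workload("emergency braking", 10, True, False, False),
    Workload("model training", 60_000, False, False, True),
    Workload("vibration monitoring", 50, True, False, True),
]
for w in workloads:
    print(f"{w.name}: {place(w)}")
```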

Financial Implications: Don’t Let the Cloud Invoice Surprise You

The cloud’s convenience can become costly at scale. Consider:

- Data Egress Fees: Cloud providers often charge for data leaving their network—especially burdensome for video or sensor-heavy applications.

- Bandwidth Costs: Especially in cellular or satellite deployments, unfiltered data transmission can become your largest expense.

- Edge CapEx vs. Cloud OpEx: Edge devices require upfront investment but may lower recurring cloud and network costs over time.

- Lifecycle Costs: Edge maintenance and security updates must be accounted for—but can be automated with the right orchestration tools.

- Energy Efficiency: Edge processing reduces wireless transmissions, extending device lifespans and lowering field servicing needs.

Pro tip: Always model your total cost of ownership (TCO) across both infrastructure and operations before committing to an architecture. Costs that look negligible in a pilot, such as egress fees and cellular data, can dominate at fleet scale.
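
A TCO model does not need to be elaborate to be useful. The sketch below compares a cloud-only design against a hybrid one over three years and computes the break-even point for the edge hardware. Every dollar figure is a made-up placeholder; substitute your own quotes and usage estimates.

```python
def tco(capex: float, monthly_opex: float, months: int) -> float:
    """Total cost of ownership: upfront spend plus recurring costs over the period."""
    return capex + monthly_opex * months


def break_even_months(extra_capex: float, monthly_savings: float) -> float:
    """Months until an upfront edge investment is repaid by lower recurring costs."""
    if monthly_savings <= 0:
        return float("inf")  # the investment never pays back
    return extra_capex / monthly_savings


# Hypothetical figures for a 36-month horizon.
cloud_only = tco(capex=0, monthly_opex=12_000, months=36)
hybrid = tco(capex=150_000, monthly_opex=5_000, months=36)
payback = break_even_months(extra_capex=150_000, monthly_savings=12_000 - 5_000)

print(f"cloud-only: ${cloud_only:,.0f}")
print(f"hybrid:     ${hybrid:,.0f}")
print(f"edge hardware pays back after {payback:.1f} months")
```

With these placeholder numbers the hybrid design wins over three years but loses over one, which is exactly why the time horizon belongs in the model.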

What’s Next: Edge and Cloud Are Converging

The future isn’t either/or—it’s tighter integration.

- TinyML & Edge AI: Running inference on microcontrollers unlocks smart capabilities without heavy compute.

- 5G & Beyond: Higher throughput and lower latency enable richer cloud interactions, but won’t eliminate the need for edge processing where offline operation or data locality matters.

- Serverless at the Edge: Frameworks like AWS IoT Greengrass and Azure IoT Edge are making deployment and orchestration more developer-friendly.

- Digital Twins: Synchronizing physical and virtual systems relies on real-time edge data to simulate, predict, and optimize.

Takeaways

For modern IoT, there is no one-size-fits-all solution. The best architectures recognize that performance, cost, and reliability vary by application, and must be balanced accordingly.

By designing hybrid systems that process data at the edge when speed matters and in the cloud when scale matters, technical leaders can build smarter, leaner, and more resilient IoT solutions.

The future of IoT isn’t on the edge or in the cloud. It’s intelligently distributed.